Why is Softmax not used when Cross-Entropy Loss is used as the loss function during Neural Network training in PyTorch? | by Shakti Wadekar | Medium
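
In short, PyTorch's `nn.CrossEntropyLoss` expects raw, unnormalized logits and applies log-softmax internally. Its documentation describes it as equivalent to `LogSoftmax` followed by `NLLLoss`, so an extra `Softmax` layer at the end of the model normalizes twice. The sketch below reproduces the per-sample computation in plain Python instead of PyTorch so that it has no dependencies. The helper names are hypothetical and are not PyTorch APIs.

```python
import math


def log_softmax(logits):
    """Log-softmax computed with the log-sum-exp trick for numerical stability."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def softmax(logits):
    return [math.exp(v) for v in log_softmax(logits)]


def cross_entropy_from_logits(logits, target):
    """Per-sample value of what nn.CrossEntropyLoss computes: -log softmax(logits)[target]."""
    return -log_softmax(logits)[target]


logits = [4.0, 1.0, -2.0]
target = 0

correct = cross_entropy_from_logits(logits, target)
# Common mistake: apply softmax in the model, then pass the probabilities
# to the loss as if they were logits (softmax ends up applied twice).
double = cross_entropy_from_logits(softmax(logits), target)

print(f"loss on logits:          {correct:.4f}")
print(f"loss on softmax outputs: {double:.4f}")
```

When probabilities are fed in as if they were logits, every input lies in $[0, 1]$. For $K = 3$ classes the loss therefore cannot drop below $\log(1 + 2/e) \approx 0.55$, even for a perfectly confident prediction. The training signal is distorted, not just rescaled.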

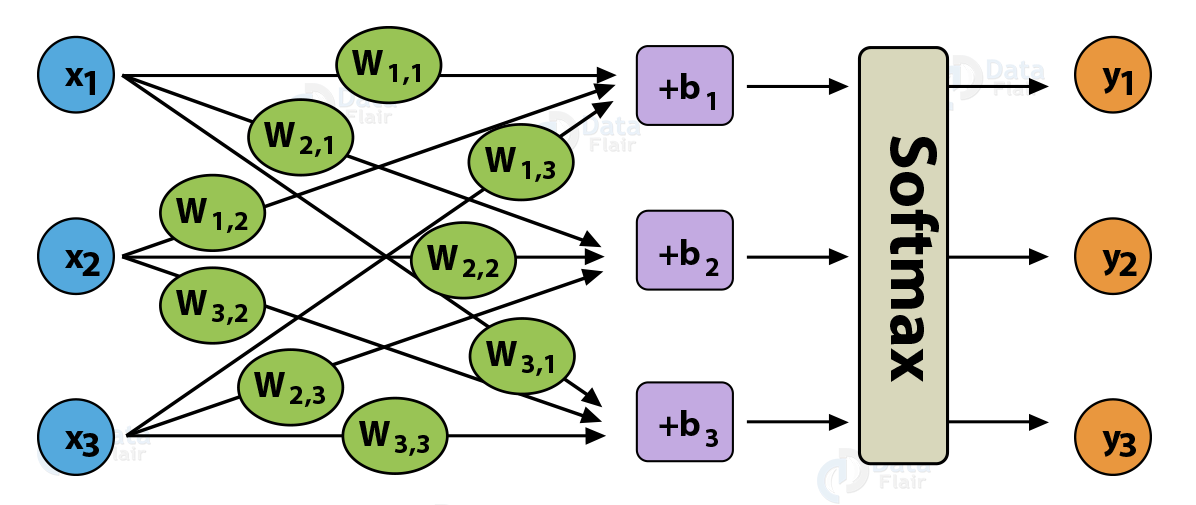

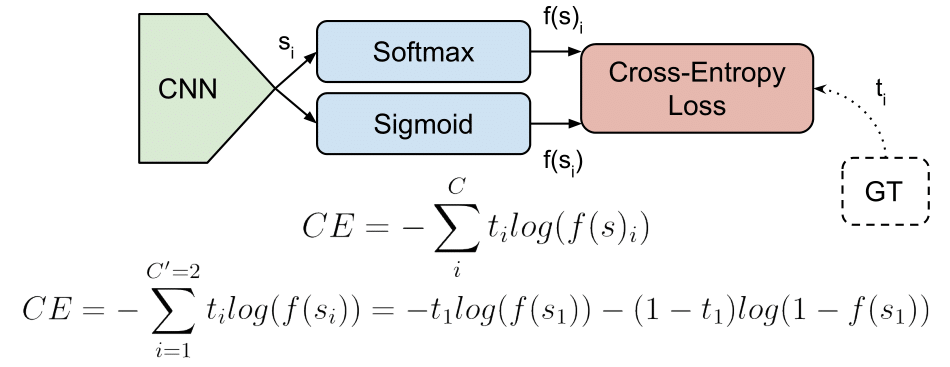

Convolutional Neural Networks (CNN): Softmax & Cross-Entropy - Blogs - SuperDataScience
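
Softmax maps a vector of logits $z$ to probabilities $\mathrm{softmax}(z)_i = e^{z_i} / \sum_j e^{z_j}$. A naive implementation overflows for large logits. Subtracting $\max_j z_j$ first does not change the result, because the common factor $e^{-\max z}$ cancels, and it keeps every exponent at or below zero. The following is a minimal plain-Python sketch; the function names are our own.

```python
import math


def softmax_naive(z):
    exps = [math.exp(v) for v in z]  # math.exp raises OverflowError above ~709
    total = sum(exps)
    return [e / total for e in exps]


def softmax_stable(z):
    # Shift by the max so the largest exponent is exp(0) = 1; the shift cancels.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


small = [1.0, 2.0, 3.0]
big = [1000.0, 1001.0, 1002.0]

# Both versions agree where the naive one works...
print(softmax_naive(small))
print(softmax_stable(small))

# ...but only the stable one handles large logits.
try:
    softmax_naive(big)
except OverflowError as err:
    print("naive softmax overflowed:", err)
print(softmax_stable(big))  # same as softmax_stable(small): only differences between logits matter
```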

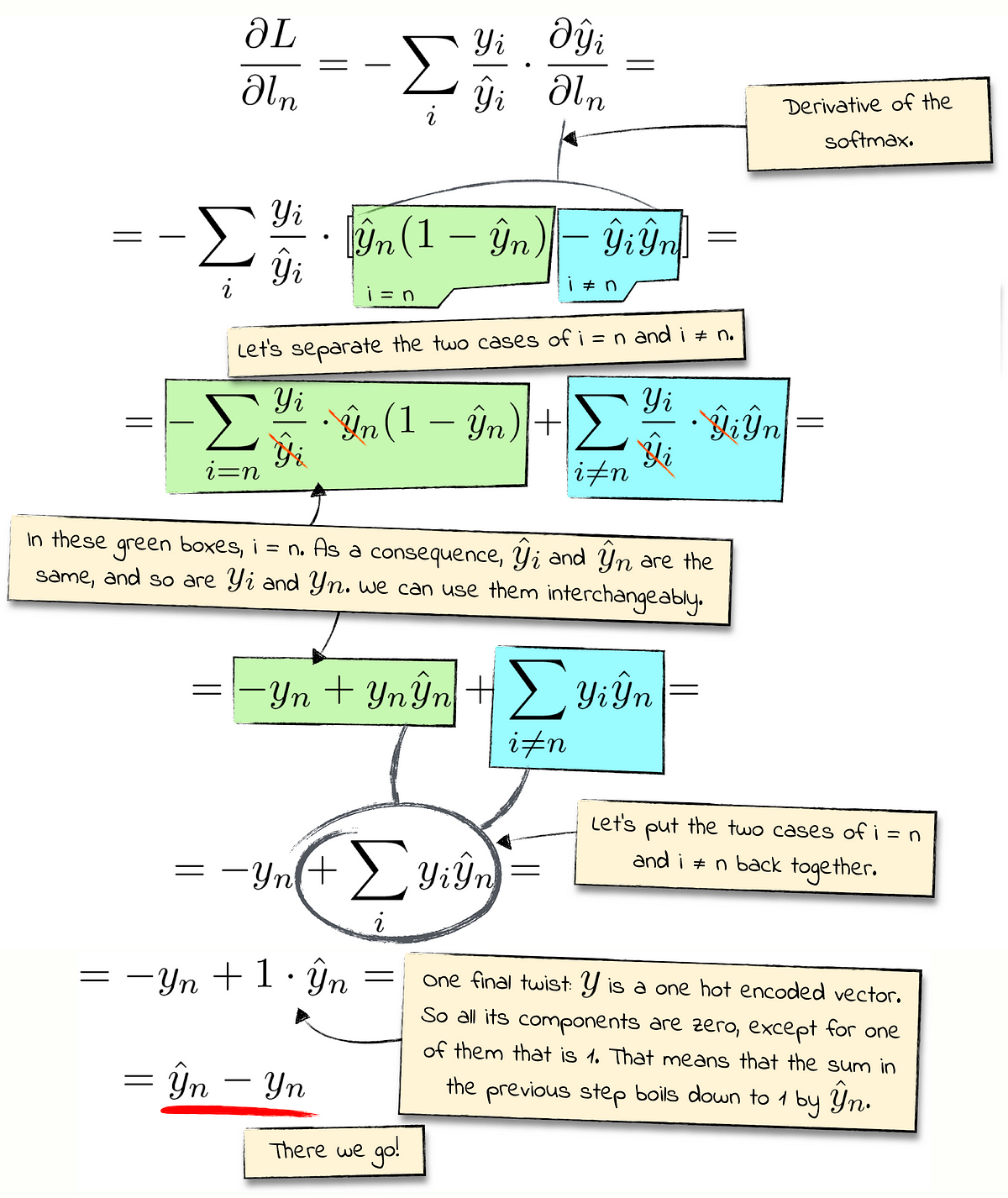

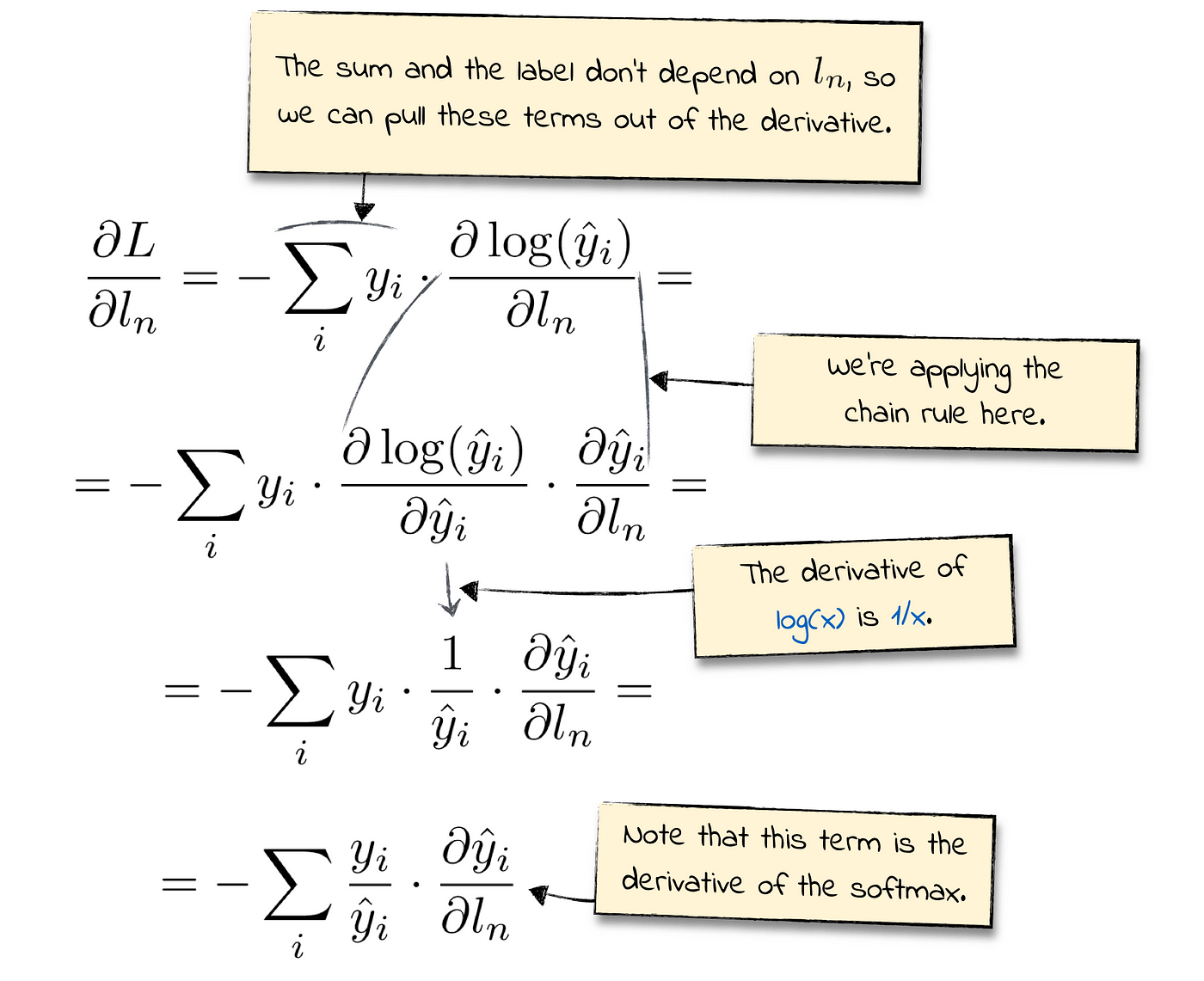

Sebastian Raschka on X: "Sketched out the loss gradient for softmax regr in class today, reminding me of how nicely multi-category cross entropy deriv. play with softmax deriv., resulting in a super
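
A well-known result of combining the two derivatives is that, for $L = -\log \mathrm{softmax}(z)_c$, the gradient with respect to the logits is simply $\partial L / \partial z_i = p_i - y_i$. Here $p = \mathrm{softmax}(z)$ and $y$ is the one-hot encoding of the true class $c$. The plain-Python sketch below checks this identity numerically with central finite differences; the helper names and test values are our own choices.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def ce_loss(z, c):
    """Cross-entropy of softmax(z) against the class index c."""
    return -math.log(softmax(z)[c])


def analytic_grad(z, c):
    # dL/dz_i = p_i - y_i, with y the one-hot encoding of c
    p = softmax(z)
    return [p_i - (1.0 if i == c else 0.0) for i, p_i in enumerate(p)]


def numeric_grad(z, c, h=1e-6):
    # Central difference: (L(z + h e_i) - L(z - h e_i)) / (2h)
    grad = []
    for i in range(len(z)):
        plus = list(z)
        plus[i] += h
        minus = list(z)
        minus[i] -= h
        grad.append((ce_loss(plus, c) - ce_loss(minus, c)) / (2 * h))
    return grad


z = [0.5, -1.2, 2.0, 0.3]
c = 1
print(analytic_grad(z, c))
print(numeric_grad(z, c))
```

The gradient entries always sum to zero because $\sum_i p_i = \sum_i y_i = 1$. Only the true-class entry is negative, so a gradient step raises that logit and lowers the others.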

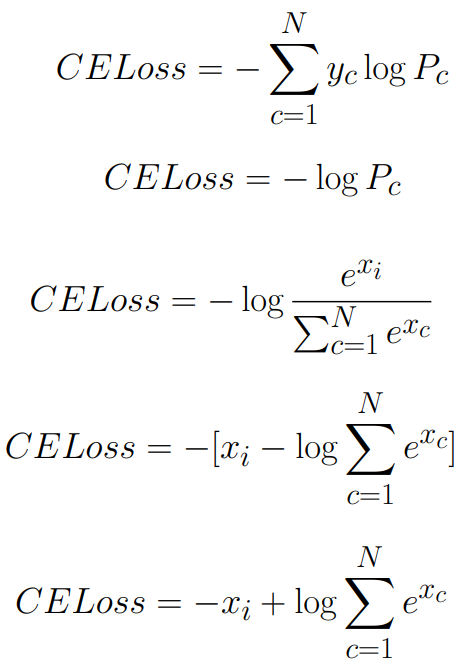

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names
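
Several of these names refer to closely related formulas. For a binary label $y \in \{0, 1\}$ and predicted probability $p = P(y = 1)$, binary cross-entropy (also called logistic loss or log loss) is $-[y \log p + (1 - y)\log(1 - p)]$. Focal loss multiplies the cross-entropy term by $(1 - p_t)^\gamma$, where $p_t$ is the probability assigned to the true class, so it reduces to cross-entropy when $\gamma = 0$. The plain-Python sketch below omits focal loss's optional class-balancing weight $\alpha$. The helper names and the choice $\gamma = 2$ are illustrative assumptions.

```python
import math


def binary_cross_entropy(p, y):
    """Binary cross-entropy (logistic loss) for one prediction p = P(y = 1)."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def focal_loss(p, y, gamma=2.0):
    """Binary focal loss without the optional alpha weighting."""
    p_t = p if y == 1 else 1 - p  # probability assigned to the true class
    return -((1 - p_t) ** gamma) * math.log(p_t)


# Easy, uncertain, and badly wrong predictions for a positive example (y = 1)
for p in (0.9, 0.6, 0.1):
    bce = binary_cross_entropy(p, 1)
    fl = focal_loss(p, 1, gamma=2.0)
    print(f"p={p:.1f}  BCE={bce:.4f}  focal={fl:.4f}  ratio={fl / bce:.3f}")
```

The ratio column equals $(1 - p_t)^\gamma$. With $\gamma = 2$, the well-classified example ($p = 0.9$) keeps only 1% of its cross-entropy, while the misclassified one ($p = 0.1$) keeps 81%.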

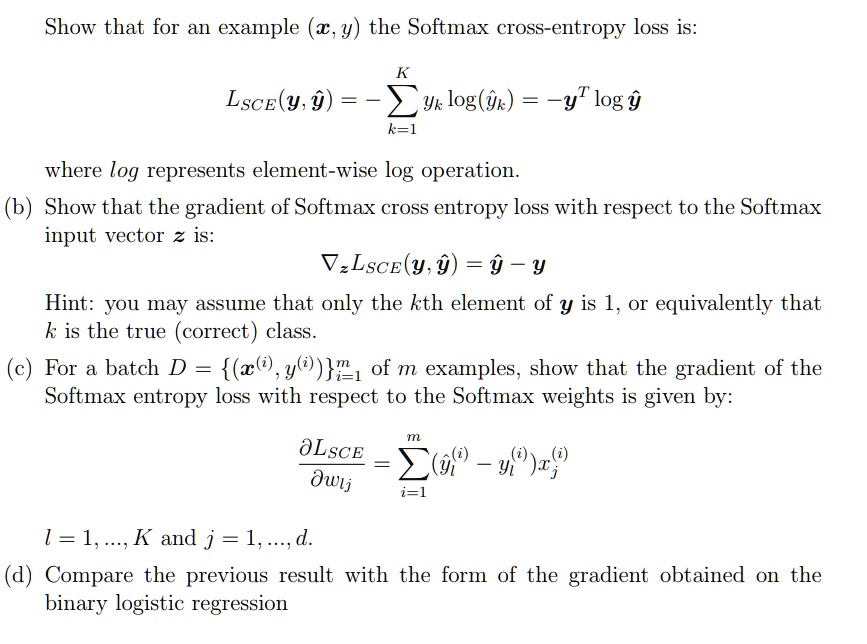

SOLVED: Show that for all examples $(x, y)$, the Softmax cross-entropy loss is $L_{\mathrm{SCE}}(\hat{y}, y) = -\sum_k y_k \log(\hat{y}_k) = -y^{\top} \log(\hat{y})$, where $\log$ represents the element-wise log operation. (b) Show that the
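
When the label is one-hot, the sum keeps only the term for the true class. The short worked reduction below writes $\hat{y} = \mathrm{softmax}(z)$ for logits $z \in \mathbb{R}^K$ and $c$ for the true class; this notation is ours, not the source's.

```latex
y_k = \begin{cases} 1, & k = c \\ 0, & k \neq c \end{cases}
\quad\Longrightarrow\quad
L_{\mathrm{SCE}}(\hat{y}, y)
  = -\sum_{k=1}^{K} y_k \log \hat{y}_k
  = -\log \hat{y}_c
  = -z_c + \log \sum_{j=1}^{K} e^{z_j}
```

The last form never materializes $\hat{y}$, so it can be evaluated stably with the log-sum-exp trick. This is one common reason frameworks fuse softmax and cross-entropy into a single loss that takes logits directly.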

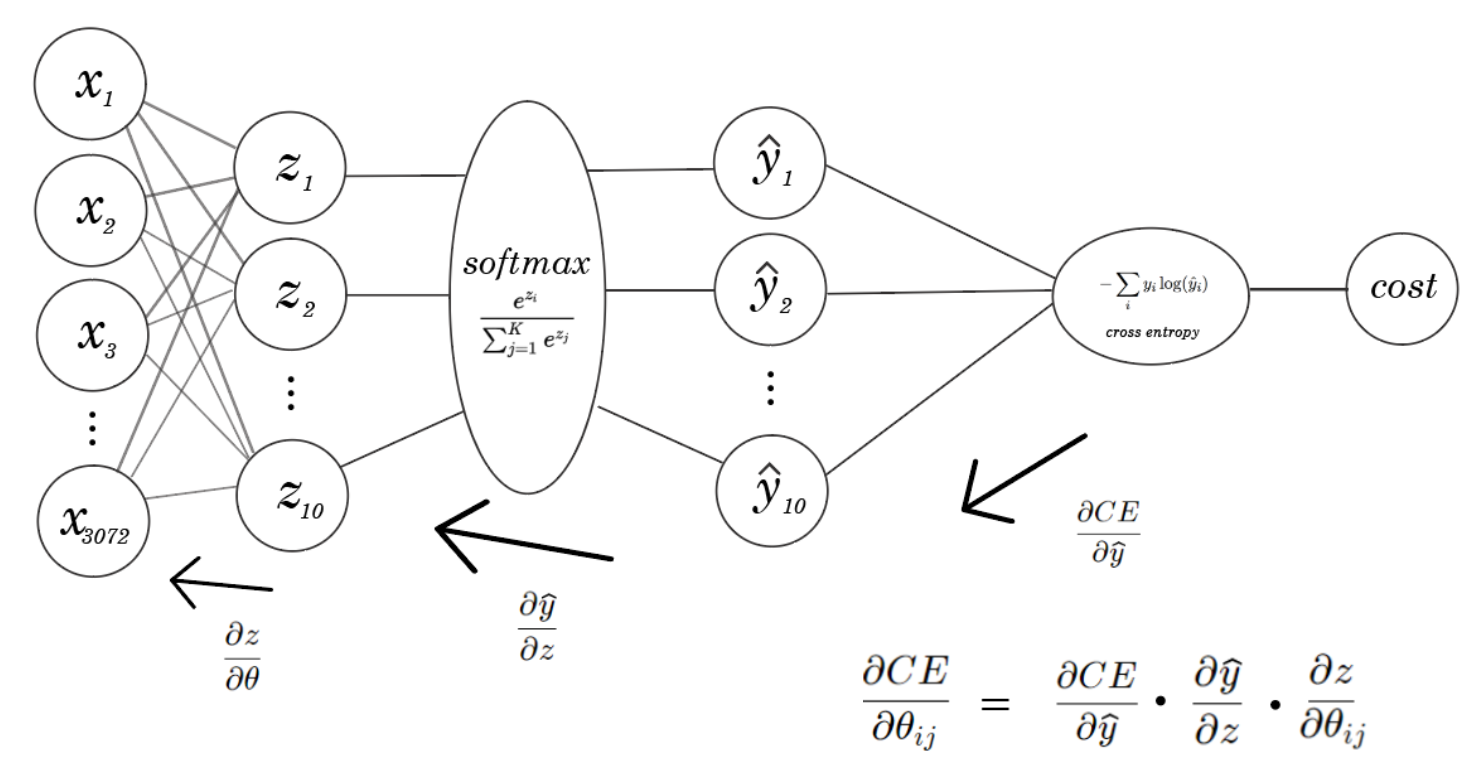

Gradient Descent Update rule for Multiclass Logistic Regression | by adam dhalla | Artificial Intelligence in Plain English
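
The cross-entropy gradient with respect to the logits is $p - y$, where $p = \mathrm{softmax}(z)$ and $y$ is the one-hot target. Applying the chain rule through a linear layer $z = Wx + b$ then gives the update $W \leftarrow W - \eta\,(p - y)\,x^{\top}$ and $b \leftarrow b - \eta\,(p - y)$ for learning rate $\eta$. Below is a minimal plain-Python sketch of per-example gradient descent. The toy data, the learning rate of 0.1, and the 200 epochs are arbitrary assumptions chosen for illustration.

```python
import math


def softmax(z):
    m = max(z)  # shift for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def train_softmax_regression(X, labels, n_classes, lr=0.1, epochs=200):
    """Per-example gradient descent for multiclass logistic (softmax) regression."""
    n_features = len(X[0])
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    history = []  # mean cross-entropy per epoch
    for _ in range(epochs):
        total = 0.0
        for x, c in zip(X, labels):
            # Forward pass: logits z = W x + b, probabilities p = softmax(z)
            z = [sum(w_kj * x_j for w_kj, x_j in zip(W[k], x)) + b[k]
                 for k in range(n_classes)]
            p = softmax(z)
            total += -math.log(p[c])
            # Backward pass: dL/dz_k = p_k - y_k, so dL/dW_kj = (p_k - y_k) * x_j
            for k in range(n_classes):
                g = p[k] - (1.0 if k == c else 0.0)
                for j in range(n_features):
                    W[k][j] -= lr * g * x[j]
                b[k] -= lr * g
        history.append(total / len(X))
    return W, b, history


# Made-up toy data: three small, well-separated 2-D clusters.
X = [[0.0, 0.0], [0.2, 0.1], [2.0, 2.0], [2.1, 1.9], [0.0, 2.0], [0.1, 2.2]]
labels = [0, 0, 1, 1, 2, 2]

W, b, history = train_softmax_regression(X, labels, n_classes=3)
print(f"mean loss: first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
```

Stepping after every example (stochastic gradient descent) is one design choice. Averaging $(p - y)\,x^{\top}$ over a batch before each step gives the batch form of the same rule.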

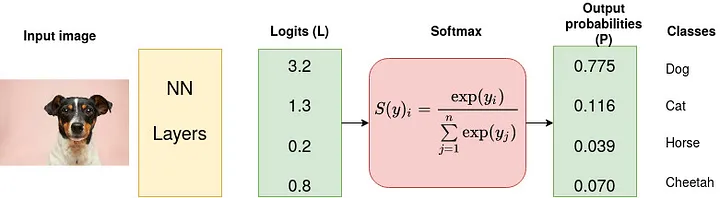

Understanding Logits, Sigmoid, Softmax, and Cross-Entropy Loss in Deep Learning | Written-Reports – Weights & Biases
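
A logit is an unnormalized score. Sigmoid maps a single logit to a probability, and softmax maps a vector of logits to a probability distribution. The two agree in the binary case: $\sigma(z) = 1/(1 + e^{-z}) = \mathrm{softmax}([z, 0])_0$. As a result, sigmoid followed by binary cross-entropy on one logit gives the same loss as softmax followed by cross-entropy on the two logits $[z, 0]$. The plain-Python check below uses helper names of our own.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs):
    m = max(zs)
    exps = [math.exp(v - m) for v in zs]
    s = sum(exps)
    return [e / s for e in exps]


for z in (-3.0, 0.0, 2.5):
    p_sig = sigmoid(z)
    p_soft = softmax([z, 0.0])[0]
    # Binary cross-entropy with label 1 vs. categorical cross-entropy with class 0
    bce = -math.log(p_sig)
    cce = -math.log(p_soft)
    print(f"z={z:+.1f}  sigmoid={p_sig:.6f}  softmax={p_soft:.6f}  BCE={bce:.6f}  CE={cce:.6f}")
```

More generally, adding the same constant to every logit leaves softmax unchanged, so $\mathrm{softmax}([z_1, z_2])_0 = \sigma(z_1 - z_2)$.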

![\[DL\] Categorical cross-entropy loss (softmax loss) for multi-class classification - YouTube](https://i.ytimg.com/vi/ILmANxT-12I/hq720.jpg?sqp=-oaymwEhCK4FEIIDSFryq4qpAxMIARUAAAAAGAElAADIQj0AgKJD&rs=AOn4CLDP2Mcvs9IEnETkFGUgaLNZ2t-iGg)